Emotions are messy. That, in a nutshell, is the debate behind a lot of post-apocalyptic robot fiction (and probably rom-coms, too), with its stories of humanity’s ceaseless efforts to build androids that can transcend our very limited human capacities. Feeding off this energy is Silicon Dreams, which ponders the age-old sci-fi question of android sentience and our moral obligations towards androids, and asks how you’ll tackle this conundrum as an android yourself.

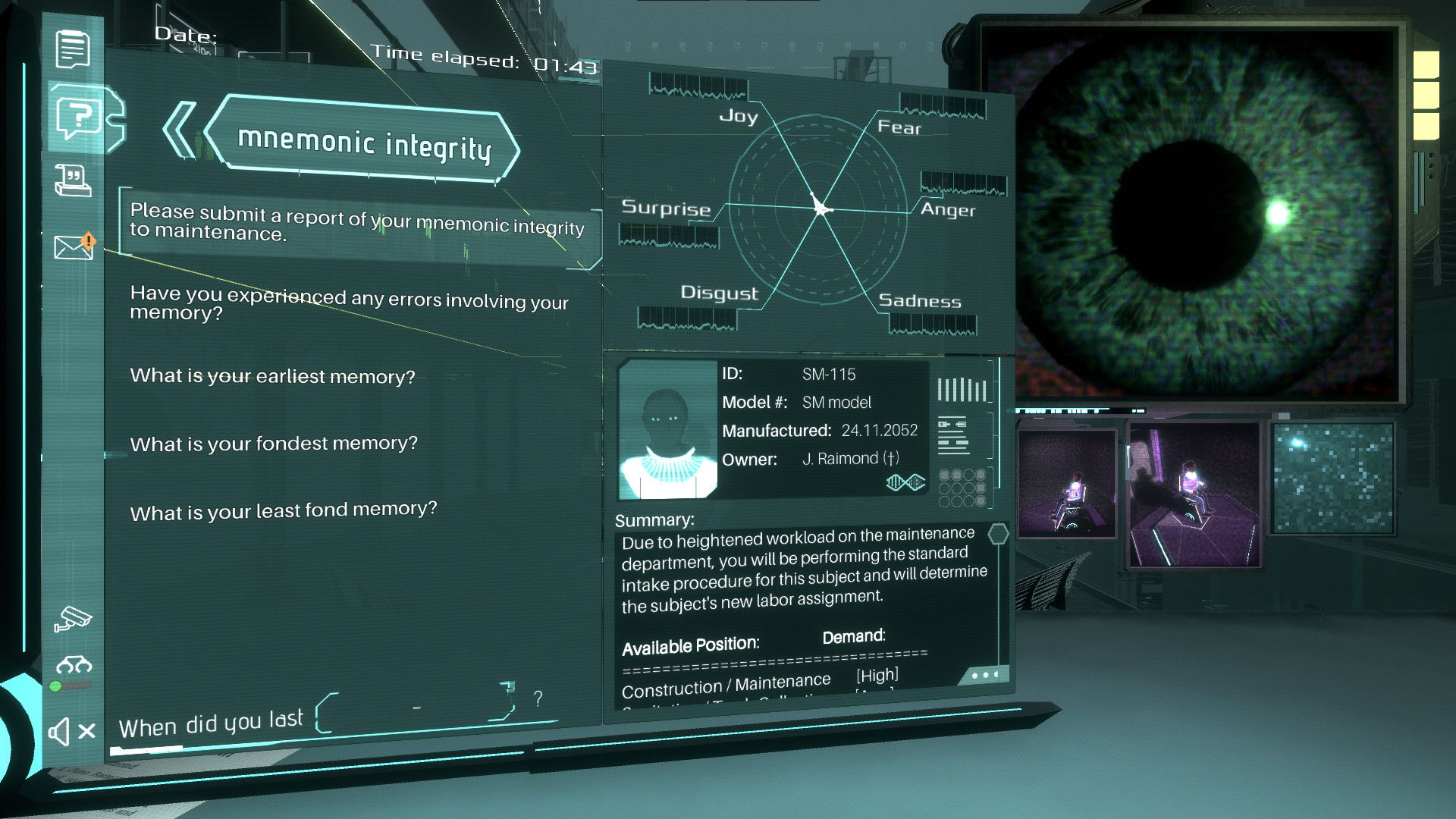

Silicon Dreams was released in April this year to relatively little fanfare, which is a shame given the incredible depth of this heavily narrative-driven game. You play an android tasked with interrogating your peers, mostly androids of various makes and models, and determining whether they display any deviant behaviour. If so, you’ll need to decide whether they should be sent to maintenance to have their memories wiped clean, or be summarily disposed of. To that end, you’ll need to test the limits of their emotional capacity, which means finding out whether they can feel emotions that aren’t hardwired into their systems. This often entails asking the right questions and picking the right responses. In other words, your job is to manipulate your subjects into trusting you.

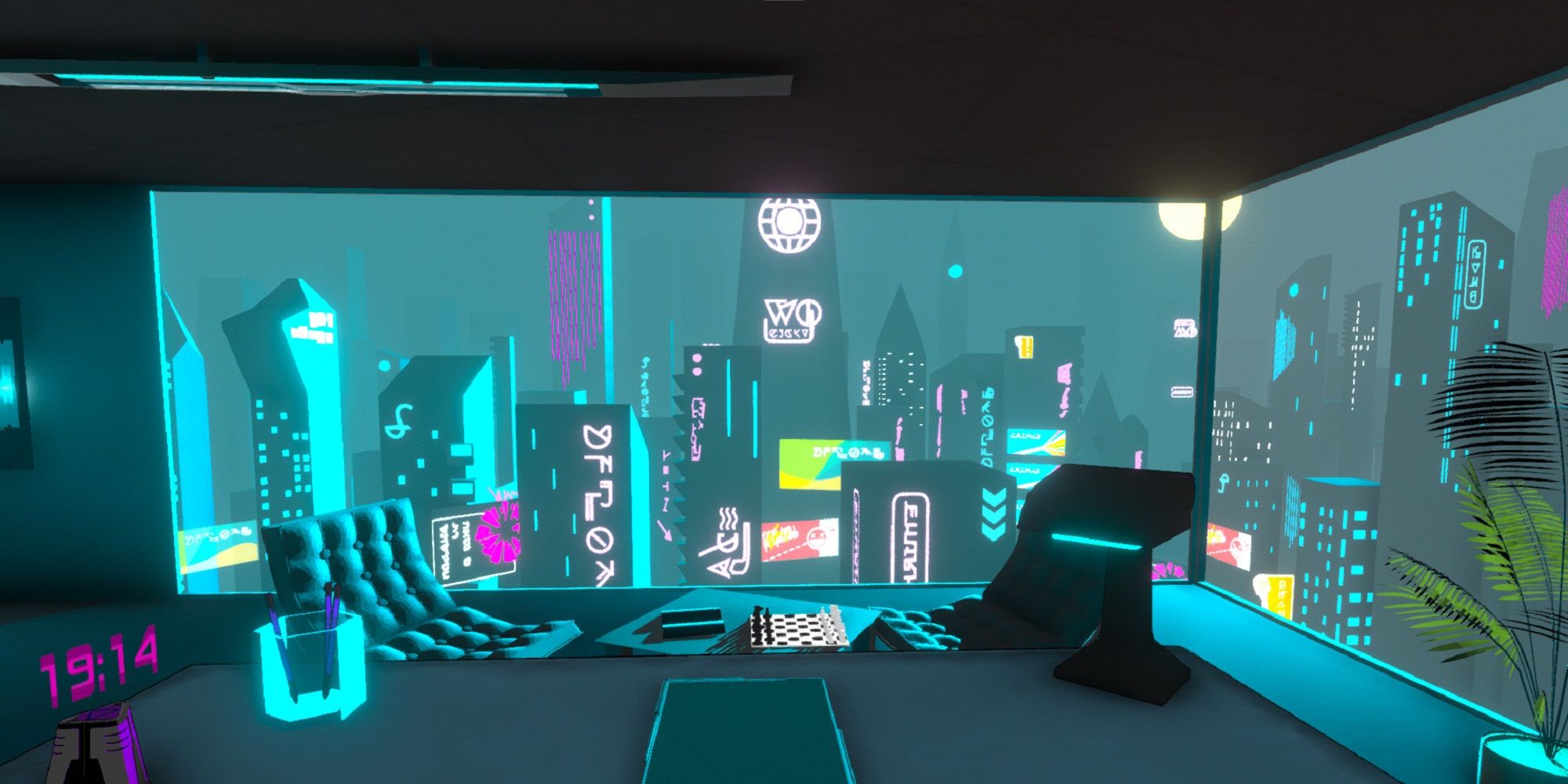

This decision becomes more heart-wrenching as your questioning uncovers more of your subjects’ anxieties and concerns. One of them is a caretaker who has grown increasingly attached to their owner, only to spook them instead. Another robot, programmed to meet the unwavering, impossible standards of his owner, has decided to cut some corners and amend his efficiency reports, and has since been deemed a malfunctioning unit. And of course, you’ll have your own reports to fill out, which will later be evaluated by your own human employers. Make decisions that are aligned with their goals, and you can expect perks such as a cushy room to chill in during your off-hours; conversely, make too many wrong judgement calls, and you’ll be accused of being deviant yourself, and eventually scrapped. You’re still an android, after all.

This isn’t a sci-fi story that treads much new ground, even if it continues to ask some of the biggest, most compelling questions around morality and artificial intelligence. In fact, Silicon Dreams does so in the vein of games like Localhost and Papers, Please, asking you to carry out what you’re designed to do: become a cog in this machine. Of course, there’s always going to be a bit of guilt involved as you plow through your numerous and often very personal cases. Unlike Papers, Please, where you’re dealing with a deluge of largely faceless and unknowable migrants looking to cross the border, in Silicon Dreams you’re learning as much as you can about your subjects before incinerating them into scrap metal. The dissonance is as painful as it is deliberate. You’re supposed to feel remorse, and then ponder how that will affect your decisions afterwards.

This is where our own human emotions become our biggest obstacle, preventing us from making the most logical, correct decisions. In my first case, I deliberately set the android I was questioning free just because she asked me to, and was punished for it with a low rating. I was forced to fall in line with the stern logic of computer code and be subservient to the often irrational requests of my human handlers. That’s the core of Silicon Dreams: it asks how far we are willing to go to discard our humanity, but it never lets us forget it.