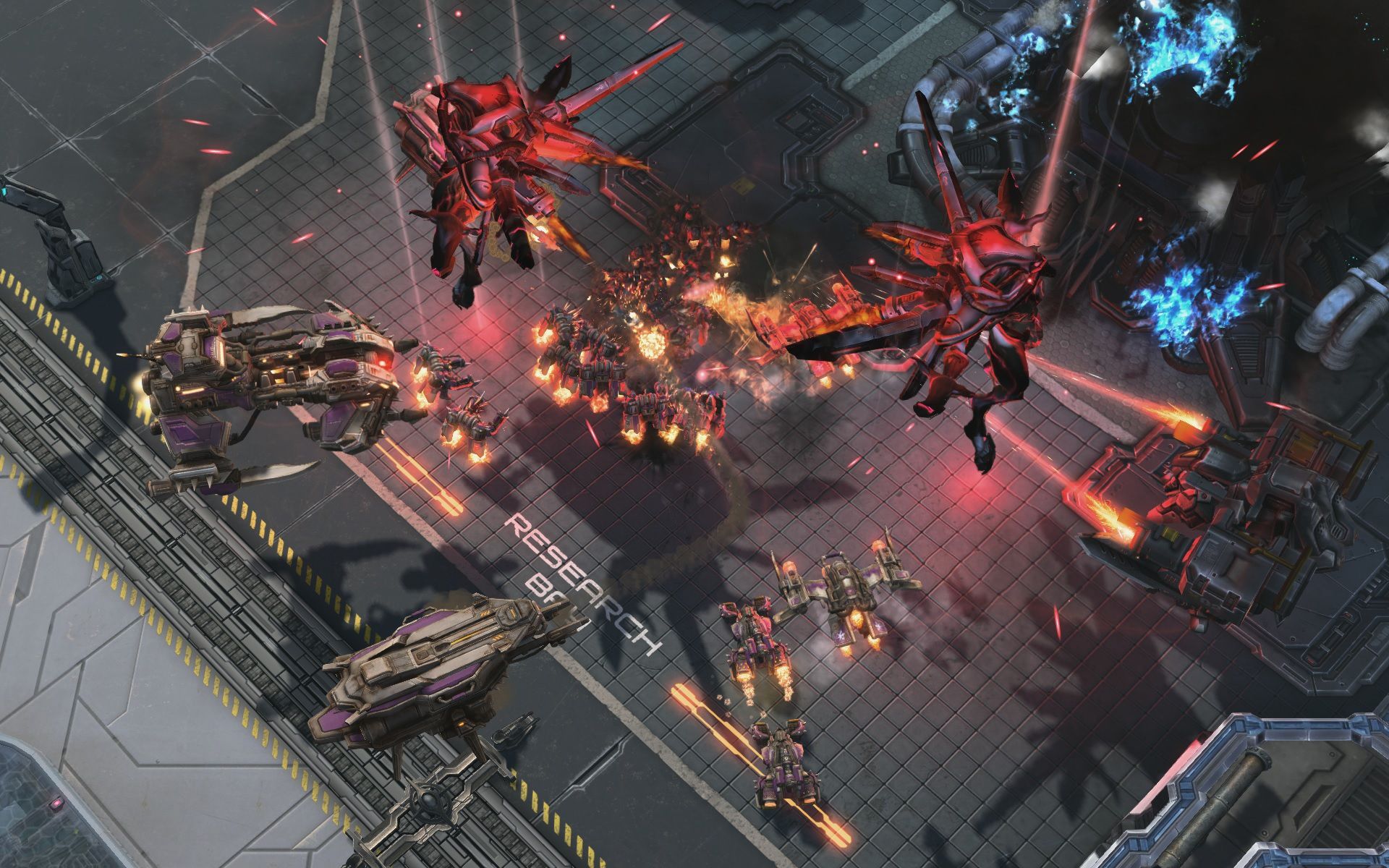

The machine revolution is coming, friends. We already knew that DeepMind’s AI could beat us at StarCraft II, and now it transpires that it may have been using advanced trickery to do so.

If you’re not familiar with DeepMind, let’s backtrack a little. They’re an artificial intelligence research unit from the UK, and they’ve been doing some pioneering (and rather disconcerting) work in the field. Most importantly (as far as gamers are concerned), they’re the creators of AlphaStar, a super-smart AI that can play StarCraft II at a level even the pros can’t touch.

Now, we all know that machines are totally capable of taking us on at our own games and winning. In 1996 and 1997, world chess champion Garry Kasparov played two historic matches against chess-bot Deep Blue, with man and machine coming away with one victory apiece. If you’ve ever seen the Terminator movies, you’ll know that it’s not the greatest idea to allow machines to advance too far, but you know what we’re like with technology.

And so we’ve arrived at this: the mighty StarCraft II pro MaNa (Grzegorz Komincz) was defeated 5-0 by AlphaStar last month. MaNa confessed that he had never played StarCraft matches like these before, indicating that we’re actually approaching Skynet levels of artificial intelligence. The end is nigh, friends, and only Arnold Schwarzenegger can save us now.

As damning as all of this may sound, though, there is an important caveat to bear in mind. Yes, AlphaStar won each time, but was the cunning gaming bot playing fair? Well, that’s a different matter.

As BoingBoing states, the AI was supposedly designed to be limited to feats that human players can physically accomplish. You know, just to ensure that the playing field is relatively even. While ‘competing’ in StarCraft II, though, the AI was “often tracked clicking with superhuman speed and efficiency.”

There’s the rub, then. To the average gamer, pros often look like they’re utilising superhuman reactions and reflexes, but they aren’t really. If the StarCraft AI genuinely is, then by definition we can’t compete with it.

The curious thing is that AlphaStar may have learned this behaviour from players themselves. Of course, it would have needed data from actual matches between very advanced players in order to train, and what do very advanced players tend to do? Click their mice out of habit, even while nothing’s happening in the game.

“As a result,” the report goes on, “Deepmind would have been forced to lift the AI's clickspeed limits to escape this behavior, at which point it develops strategies that irreducibly depend on bursts of superhuman speed.”

This tech is a fantastic achievement, for sure, but there’s a lot of work still to be done when it comes to replicating human behaviour. We’ll just have to wait and see if pro players will ever be up to the challenge.